About the Reading Group

Diffusion LLMs are faster, more controllable successors to traditional LLMs and are rapidly gaining adoption. This reading group aims to build a community for exchanging and debating emerging ideas in this space. While our primary focus is discrete diffusion models for language, we also invite work that extends these methods to other modalities and applications—such as molecular design, drug discovery, and beyond. Each session features an author-led presentation followed by Q&A, with recordings shared on our YouTube channel.

Paper Discussions

Authors present their work followed by discussions and Q&A sessions

Recorded Sessions

All sessions are recorded and available on YouTube

Community

Stay informed through our email list and Twitter/X

Meet the Organizers

Subham Sahoo

Holds a Ph.D. from Cornell Tech, where he specialized in Diffusion Language Models. He has made foundational contributions to the field, with his work deployed at scale by Google, NVIDIA, and ByteDance across language generation and drug discovery.

Justin Deschenaux

PhD student in Machine Learning at EPFL, advised by Prof. Caglar Gulcehre. Previously interned at Apple MLR. His research interests include diffusion language models, fast generative models, and generalization.

Latest Sessions

View All Sessions

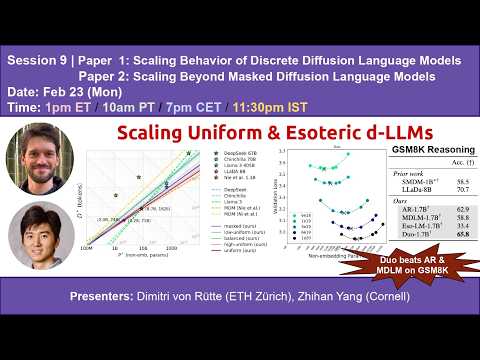

S9 | Scaling Discrete Diffusion Language Models

Dimitri von Rütte (ETH) and Zhihan Yang (Cornell) present two papers on scaling laws of discrete diffusion LLMs that challenge the dominance of Masked Diffusion.

Dimitri von Rütte (ETH) and Zhihan Yang (Cornell) present "Scaling Behavior of Discrete Diffusion Language Models" (https://arxiv.org/abs/2512.10858) and "Scaling Beyond Masked Diffusion Language Models" (https://www.arxiv.org/abs/2602.15014), two recent papers that present systematic scaling laws for uniform-state and hybrid discrete diffusion LLMs. Both papers challenge the dominance of Masked Diffusion.

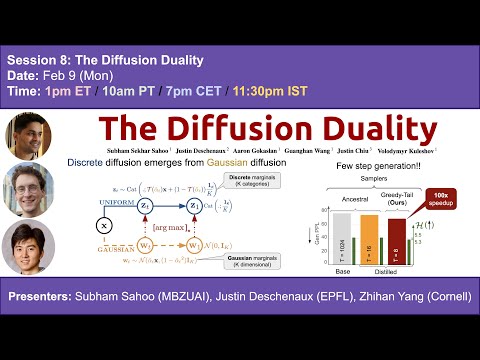

S8 | The Diffusion Duality

Subham Sahoo (IFM), Justin Deschenaux (EPFL), and Zhihan Yang (Cornell) present The Diffusion Duality (ICML 2025).

Uniform-state discrete diffusion models hold the promise of fast text generation due to their inherent ability to self-correct. However, they are typically outperformed by autoregressive models and masked diffusion models. In this work, we narrow this performance gap by leveraging a key insight: Uniform-state diffusion processes naturally emerge from an underlying Gaussian diffusion. Our method, Duo, transfers powerful techniques from Gaussian diffusion to improve both training and sampling. First, we introduce a curriculum learning strategy guided by the Gaussian process, doubling training speed by reducing variance. Models trained with curriculum learning surpass autoregressive models in zero-shot perplexity on 3 of 7 benchmarks. Second, we present Discrete Consistency Distillation, which adapts consistency distillation from the continuous to the discrete setting. This algorithm unlocks few-step generation in diffusion language models by accelerating sampling by two orders of magnitude.
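The link between Gaussian and uniform-state diffusion mentioned above can be illustrated with a small simulation. This is a minimal sketch, not the authors' implementation: it assumes the discrete token is recovered by taking the argmax of a Gaussian-noised one-hot vector, and the function names, the noise parameterization (`alpha`), and the sample count are all hypothetical choices made for illustration.

```python
import math
import random


def argmax_of_noised_one_hot(token, vocab_size, alpha, rng):
    """Add Gaussian noise to a one-hot vector and map it back to a token.

    latent = alpha * onehot(token) + sqrt(1 - alpha**2) * eps, eps ~ N(0, I)
    (an assumed variance-preserving parameterization).
    """
    sigma = math.sqrt(max(0.0, 1.0 - alpha * alpha))
    latent = [
        alpha * (1.0 if i == token else 0.0) + sigma * rng.gauss(0.0, 1.0)
        for i in range(vocab_size)
    ]
    # The discrete state is the index of the largest latent coordinate.
    return max(range(vocab_size), key=latent.__getitem__)


def empirical_marginal(token, vocab_size, alpha, n_samples=2000, seed=0):
    """Estimate the distribution over tokens after noising and argmax."""
    rng = random.Random(seed)
    counts = [0] * vocab_size
    for _ in range(n_samples):
        counts[argmax_of_noised_one_hot(token, vocab_size, alpha, rng)] += 1
    return [c / n_samples for c in counts]


if __name__ == "__main__":
    for alpha in (1.0, 0.5, 0.0):
        print(alpha, empirical_marginal(token=2, vocab_size=5, alpha=alpha))
```

With `alpha = 1.0` the original token is always recovered; as `alpha` decreases, probability mass spreads evenly over the other tokens, which is the shape of a uniform-state discrete diffusion marginal.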

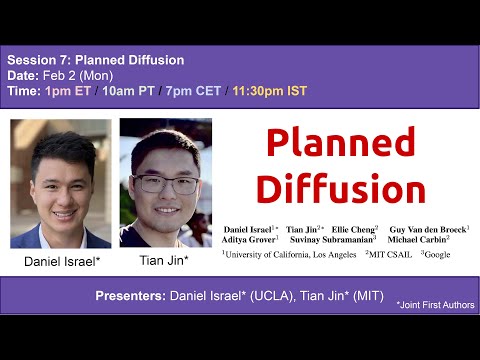

S7 | Planned Diffusion

Daniel Israel and Tian Jin discuss Planned Diffusion. Planned diffusion speeds up text generation by planning with an autoregressive model and then generating multiple spans in parallel with diffusion while keeping quality nearly the same.

Daniel Israel and Tian Jin discuss planned diffusion, a hybrid text generation method where a language model first creates a short autoregressive “plan” that splits output into independent spans, then generates those spans in parallel with diffusion, achieving significantly faster generation while maintaining near-autoregressive quality.

Latest Relevant Videos

View All Videos

But How Do Diffusion Language Models Actually Work?

Jia-Bin Huang explores several ideas for applying diffusion models to language modeling

Most large language models (LLMs) today are autoregressive, i.e., they predict text in left-to-right order. Diffusion models, by contrast, offer iterative refinement, flexible control, and faster sampling. In this video, we explore several ideas for applying diffusion models to language modeling.

Simple Guidance Mechanisms for Discrete Diffusion Models

Simple Guidance Mechanisms for Discrete Diffusion Models (ICLR 2025 video)

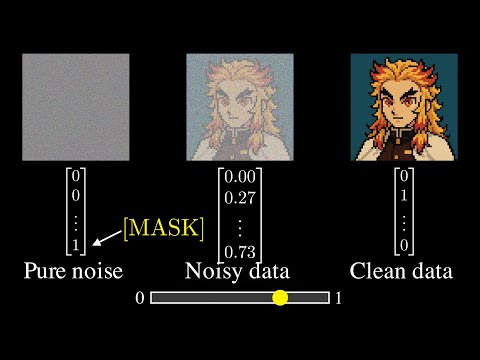

Diffusion models for continuous data gained widespread adoption owing to their high-quality generation and control mechanisms. However, controllable diffusion on discrete data faces challenges given that continuous guidance methods do not directly apply to discrete diffusion. Here, we provide a straightforward derivation of classifier-free and classifier-based guidance for discrete diffusion, as well as a new class of diffusion models that leverage uniform noise and that are more guidable because they can continuously edit their outputs. We improve the quality of these models with a novel continuous-time variational lower bound that yields state-of-the-art performance, especially in settings involving guidance or fast generation. Empirically, we demonstrate that our guidance mechanisms combined with uniform noise diffusion improve controllable generation relative to autoregressive and diffusion baselines on several discrete data domains, including genomic sequences, small molecule design, and discretized image generation.
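As a rough illustration of classifier-free guidance on a categorical distribution, the sketch below combines a conditional and an unconditional per-token distribution using a guidance strength `gamma`. It assumes the commonly used form in which the guided probability is proportional to `cond**gamma * uncond**(1 - gamma)`; the exact formulation in the paper may differ, and the function name and the `eps` constant are hypothetical.

```python
import math


def guided_distribution(cond_probs, uncond_probs, gamma):
    """Combine conditional and unconditional token probabilities.

    Computes p_gamma(v) proportional to cond(v)**gamma * uncond(v)**(1 - gamma)
    in log space, then renormalizes over the vocabulary.
    """
    eps = 1e-12  # avoids log(0); an illustrative choice
    logits = [
        gamma * math.log(c + eps) + (1.0 - gamma) * math.log(u + eps)
        for c, u in zip(cond_probs, uncond_probs)
    ]
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(logits)
    weights = [math.exp(value - top) for value in logits]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    cond = [0.6, 0.3, 0.1]
    uncond = [1 / 3, 1 / 3, 1 / 3]
    for gamma in (0.0, 1.0, 2.0):
        print(gamma, guided_distribution(cond, uncond, gamma))
```

Under this assumed form, `gamma = 0` recovers the unconditional distribution, `gamma = 1` recovers the conditional one, and `gamma > 1` sharpens the distribution toward tokens favored by the condition.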

Simple Diffusion Language Models

Quick introduction to Masked Diffusion Language Models (MDLM) by Alexander Rush

Stay Updated

Join our community and never miss a session